Performance Engineering Platform

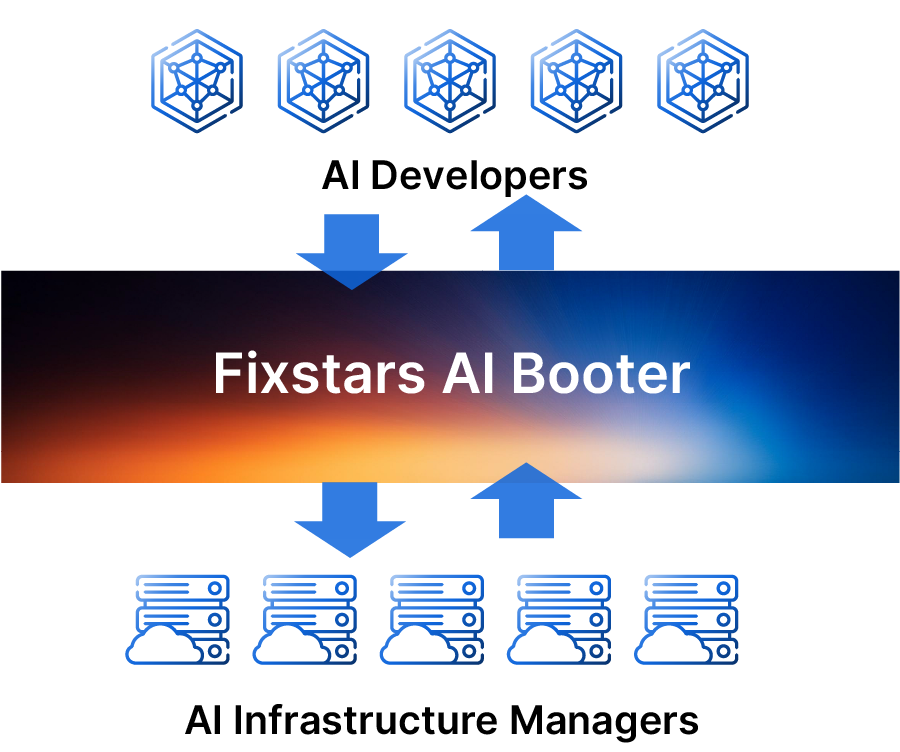

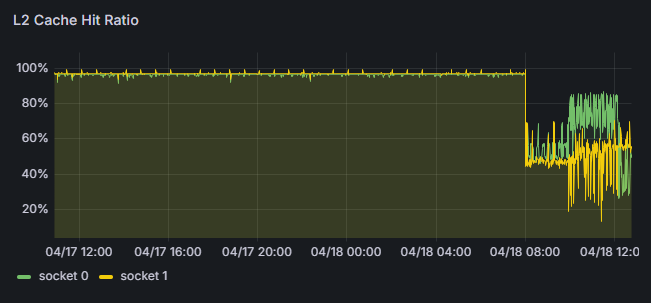

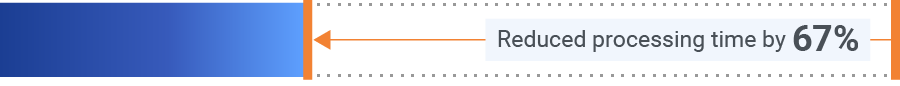

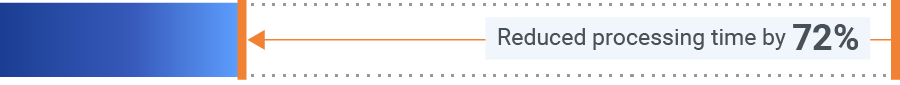

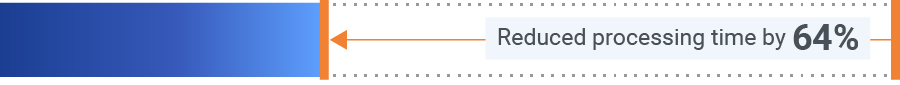

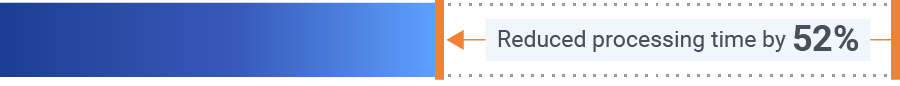

Fixstars AIBooster

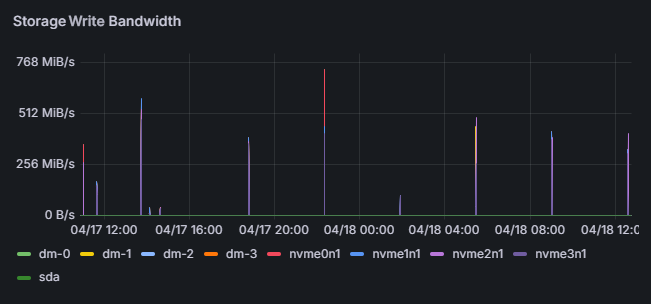

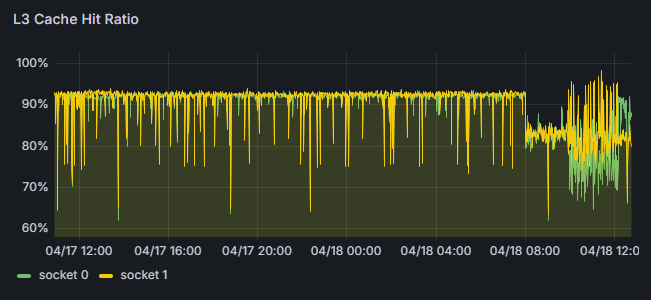

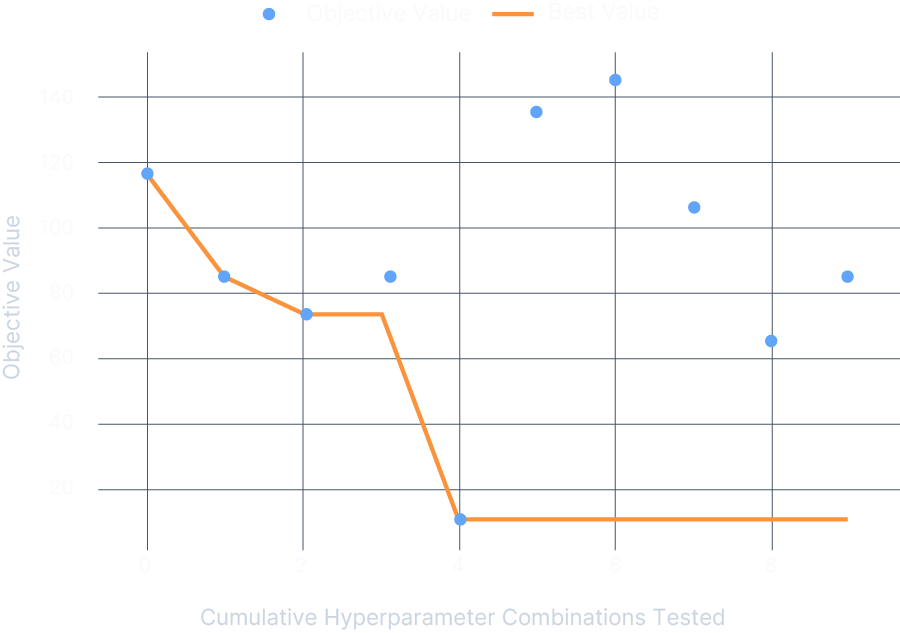

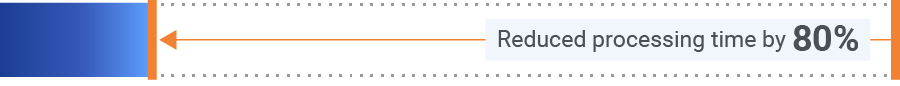

Install AIBooster on your GPU server and let it gather runtime data from your AI workloads. It identifies bottlenecks, automatically improves performance, and gives you detailed insights to guide further manual optimization.