What is Performance Engineering?

Performance Engineering is a discipline focused on continuously improving and sustaining system performance to meet specific business objectives. Optimization requirements vary from system to system, but common goals include:

Processing Performance

Enhancing data processing bandwidth and increasing throughput.

Responsiveness

Shortening user response times and reducing latency.

Efficiency

Improving power efficiency and maximizing "performance per watt."

Economic Viability

Improving cost-effectiveness and reducing Total Cost of Ownership (TCO).

From embedded devices to supercomputers, performance engineering is a core technology that significantly influences the competitiveness of products and services at every scale.

Embedded Devices

- Extending battery life

- Operating with minimal memory and power consumption

PC

- Achieving a smooth, responsive user experience

- Reducing wait times

Cloud Computing

- Reducing operational costs

- Optimizing resource utilization

Supercomputers

- Reaching peak performance

- Maximizing power efficiency

Why Performance Engineering Now?

While performance improvement is not a new concept, the emergence of Generative AI has made the strategic practice of "Performance Engineering" more critical than ever.

Four key drivers are accelerating the demand for "faster, cheaper, and more efficient" systems to unprecedented levels:

Reduced Time-to-Market

AI model development is time-intensive. Optimizing training to achieve the same accuracy with fewer computations allows for more iterations and faster product cycles, enabling companies to launch new models and services ahead of the competition.

Optimized Operational Costs

Training and inference for large-scale AI models require massive computational resources, leading to significant expenses. Optimization is essential for directly reducing these costs and improving business profitability and sustainability.

User Experience and Real-Time Requirements

For applications such as AI assistants or autonomous driving systems, where real-time response is critical, even minor latency can severely affect user experience and safety.

Environmental Considerations

The rising energy consumption associated with AI workloads is a growing environmental concern. Improving power efficiency is increasingly viewed as a vital part of corporate social responsibility (CSR).

Performance Engineering in Generative AI

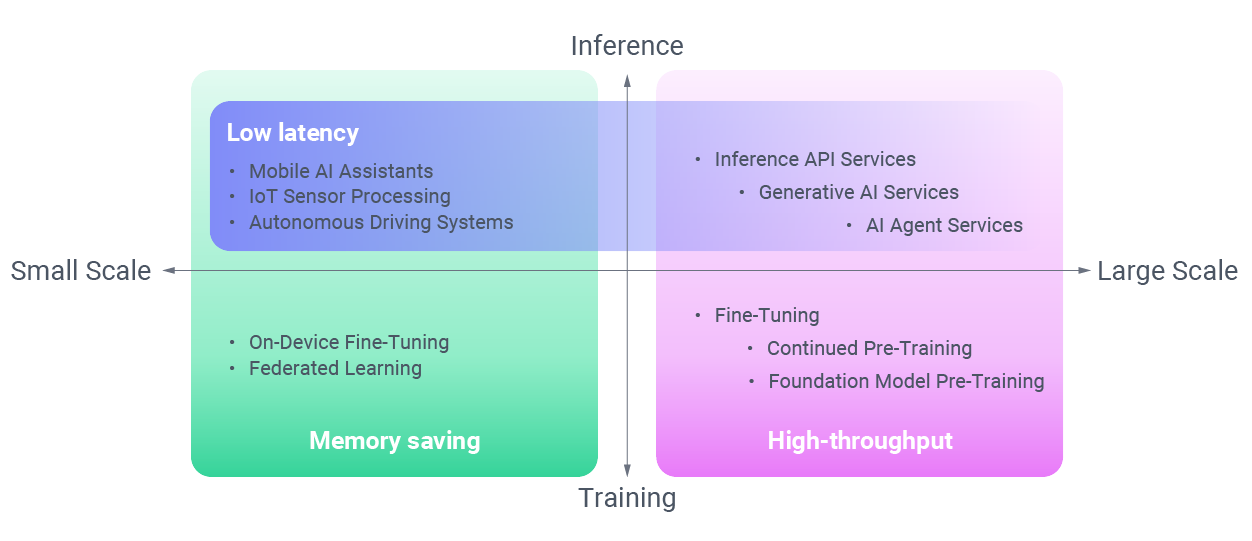

Viewed through the lens of "Scale × Use Case," Generative AI workloads fall into four typical patterns. Each calls for a focus on different performance metrics, such as memory efficiency, high throughput, or low latency.

Small Scale × Inference

Mobile AI Assistants, IoT Sensor Processing, Autonomous Driving Systems.

Large Scale × Inference

Inference API Services, Generative AI Services, AI Agent Services.

Small Scale × Training

On-Device Fine-Tuning, Federated Learning.

Large Scale × Training

Fine-Tuning, Continued Pre-Training, Foundation Model Pre-Training.

Principles of Success: The "Observe & Improve" Cycle

Performance engineering is an iterative process of observing and improving. First, measure precisely; then, optimize effectively. This continuous loop is what drives performance higher.

Observe

Select the Right Environment

Measure in an environment that is identical to the production environment, or as close to it as possible.

Control Side Effects

Minimize the overhead of measurement tools and instrumentation code, or account for that overhead when analyzing results.

Handle Variance Correctly

Identify the source of any measurement noise. If the variation can be treated as random error, repeat the measurement and summarize it with an appropriate statistic, such as the median or mean.
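The measurement principles above are tool-agnostic. As a minimal sketch, assuming a Python workload that can be wrapped in a zero-argument callable, a timing harness might discard warm-up runs, repeat the measurement, and report the median alongside the spread. The function name `measure`, its parameters, and the example workload are hypothetical illustrations, not part of any Fixstars product.

```python
import statistics
import time
from typing import Callable, Dict


def measure(fn: Callable[[], object], repeats: int = 15, warmup: int = 3) -> Dict[str, float]:
    """Run fn() repeatedly and summarize wall-clock times in seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")

    # Discard warm-up runs so one-time costs (cold caches, lazy
    # initialization, JIT compilation) do not distort the samples.
    for _ in range(warmup):
        fn()

    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)

    return {
        # The median is robust to occasional outliers (e.g. OS interrupts).
        "median": statistics.median(samples),
        "mean": statistics.mean(samples),
        # The spread shows whether the noise is small enough to ignore.
        "stdev": statistics.stdev(samples) if repeats > 1 else 0.0,
        "min": min(samples),
        "max": max(samples),
    }


if __name__ == "__main__":
    # Rough estimate of the harness's own overhead (a no-op call).
    overhead = measure(lambda: None)["median"]

    # Hypothetical workload, used only for illustration.
    result = measure(lambda: sum(i * i for i in range(100_000)))
    for name, seconds in result.items():
        print(f"{name:>6}: {seconds * 1e3:.3f} ms")
    print(f"overhead (no-op median): {overhead * 1e6:.3f} us")
```

Timing a no-op gives a rough figure for the harness's own cost, which can be set against the workload's timings when deciding whether measurement overhead matters. A large gap between the median and the maximum is a hint to look for a specific cause of noise before treating it as random error.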

Improve

Set Target Goals

Performance can never exceed the theoretical limit, and the effort required grows sharply as you approach it, so set a realistic target relative to that limit.

Address the Critical Bottleneck

Focus optimization effort where it matters most. Improving a process that accounts for only a small share of total runtime yields little overall gain.

Re-evaluate the Need for Processing

Sometimes, eliminating a process entirely is more effective than making it faster.
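Two well-known models make the "Set Target Goals" and "Address the Critical Bottleneck" principles concrete: the roofline model, which bounds attainable throughput by peak compute and memory bandwidth, and Amdahl's law, which bounds the overall speedup from optimizing only part of a workload. The sketch below is one possible illustration, not a description of Fixstars' own methodology; the function names are hypothetical, and the hardware figures are made-up round numbers rather than measurements of any real device.

```python
def amdahl_speedup(fraction: float, local_speedup: float) -> float:
    """Overall speedup when a part taking `fraction` of the original
    runtime is made `local_speedup` times faster (Amdahl's law)."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    if local_speedup <= 0.0:
        raise ValueError("local_speedup must be positive")
    # The untouched part (1 - fraction) still runs at its original cost.
    return 1.0 / ((1.0 - fraction) + fraction / local_speedup)


def roofline_bound(peak_flops: float, bandwidth: float, intensity: float) -> float:
    """Attainable FLOP/s under the roofline model.

    intensity is arithmetic intensity in FLOPs per byte of memory traffic;
    bandwidth is in bytes/s. A kernel can never beat the smaller of the
    compute roof and the memory roof.
    """
    return min(peak_flops, bandwidth * intensity)


if __name__ == "__main__":
    # Speeding up a minor step (10% of runtime) by 10x ...
    print(f"{amdahl_speedup(0.10, 10.0):.3f}")  # 1.099
    # ... gains less than speeding up the dominant step (80%) by only 2x.
    print(f"{amdahl_speedup(0.80, 2.0):.3f}")   # 1.667

    # Hypothetical hardware: 100 TFLOP/s peak, 2 TB/s memory bandwidth.
    peak, bw = 100e12, 2e12
    for ai in (10.0, 100.0):
        bound = roofline_bound(peak, bw, ai)
        print(f"intensity {ai:>5}: at most {bound / 1e12:.0f} TFLOP/s")
```

Even an infinite speedup of a step that takes 10% of runtime caps the overall gain at about 1.11x, which is why the dominant bottleneck deserves attention first. In the roofline example, the low-intensity kernel is memory-bound, so reducing data movement (or eliminating it) would help more than faster arithmetic.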

Fixstars' Performance Engineering Offerings

Implementing performance engineering requires specialized knowledge, tools, and talent.

Based on over 20 years of experience, Fixstars offers three approaches tailored to your challenges:

Fixstars AIBooster

Simply install this on your GPU servers to continuously monitor AI/LLM workloads. It automatically detects bottlenecks, improves processing speed through optimization, and reduces GPU costs.

- Burdened by rising AI processing and infrastructure costs

- Aiming to increase AI development efficiency

- Seeking better ROI from existing GPU infrastructure

Fixstars AIStation

An all-in-one environment featuring high-performance GPU workstations pre-installed with the latest LLMs and applications. It allows for secure, local LLM operations immediately upon delivery.

- Restricted from using external services due to security policies

- Developing AI models with sensitive, proprietary data

- Requiring on-site, unlimited access to the latest GPUs

Software Acceleration Services

Our expert engineers, equipped with deep hardware knowledge, analyze your existing code. We handle everything from algorithm refinement to hardware-specific optimization to unlock the full potential of your computing resources.

- Struggling with performance that falls short of business needs

- Seeking fundamental algorithmic overhauls

- Looking for a hands-on development partner